Sep 13, 13130

Mar 1, 1010

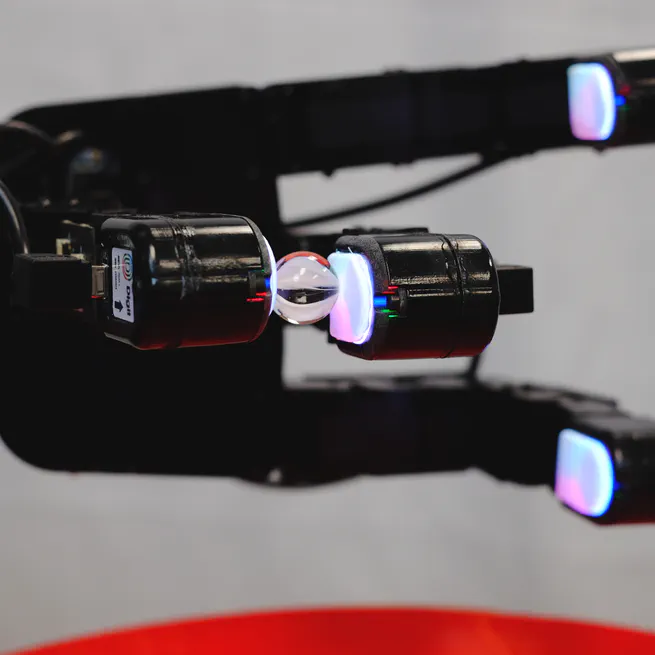

We design and demonstrate a new tactile sensor for in-hand manipulation with a robotic hand.

May 17, 17170

High-resolution tactile sensing, combined with visual approaches to prediction and planning based on deep neural networks, enables high-precision tactile servoing.

Jan 1, 1010

Jan 1, 1010

By combining high-precision tactile sensing with deep learning, robots can iteratively adjust their grasp configurations, boosting grasping performance from 65% to 94%.

Jan 1, 1010

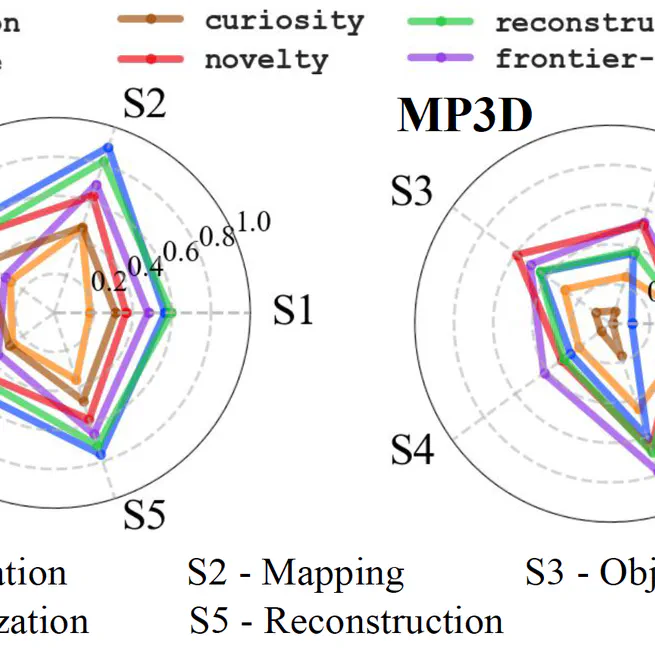

Task-agnostic visual exploration policies may be trained through a proxy "observation completion" task that requires an agent to "paint" unobserved views given a small set of observed views.

Jan 1, 1010

Active visual perception with realistic and complex imagery can be formulated as an end-to-end reinforcement learning problem whose solution benefits from the auxiliary task of action-conditioned future prediction.

Jan 1, 1010

An agent's continuous visual observations include information about how the world responds to its actions. This can provide an effective source of self-supervision for learning visual representations.

Jan 1, 1010

Jan 1, 1010

Using human-uploaded web videos as weak supervision, we can train a system that learns what good videos look like and automatically directs a virtual camera through pre-captured 360-degree videos to produce human-like videos.

Jan 1, 1010
