Jan 1, 1010

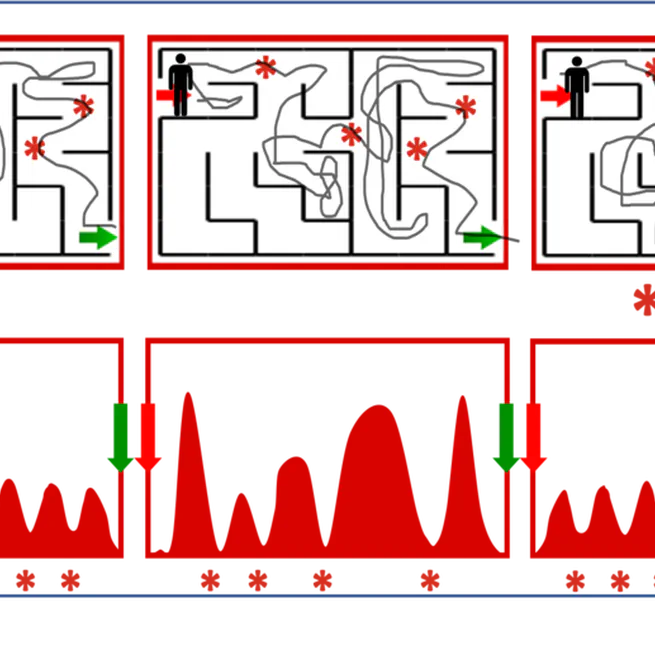

To plan towards long-term goals through visual prediction, we propose a model based on two key ideas: (i) predict in a goal-conditioned way to restrict planning only to useful sequences, and (ii) recursively decompose the goal-conditioned prediction task into an increasingly fine series of subgoals.
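As a rough illustration of idea (ii), the sketch below recursively splits a start-goal pair into subgoals, coarse to fine. It is a toy under stated assumptions, not the model from the paper: states are plain floats, and `plan_subgoals` and `midpoint` are hypothetical names, with `midpoint` standing in for a learned goal-conditioned predictor.

```python
from typing import Callable, List

# Assumption: a state is a single float. In the visual setting it would be
# an image or a latent vector produced by a learned model.
State = float


def plan_subgoals(
    start: State,
    goal: State,
    predict_subgoal: Callable[[State, State], State],
    depth: int,
) -> List[State]:
    """Return the intermediate states between start and goal, in temporal order.

    Each level of recursion predicts one subgoal between the two endpoints,
    then refines the two resulting halves independently.
    """
    if depth == 0:
        return []
    mid = predict_subgoal(start, goal)
    before = plan_subgoals(start, mid, predict_subgoal, depth - 1)
    after = plan_subgoals(mid, goal, predict_subgoal, depth - 1)
    return before + [mid] + after


def midpoint(a: State, b: State) -> State:
    """Hypothetical stand-in for a learned goal-conditioned subgoal predictor."""
    return (a + b) / 2.0


# Three levels of decomposition yield 2**3 - 1 = 7 subgoals.
plan = [0.0] + plan_subgoals(0.0, 8.0, midpoint, depth=3) + [8.0]
```

Because every prediction is conditioned on both endpoints, each recursive call only considers sequences that end at the goal, which is the restriction described in idea (i).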

Jun 1, 1010

How to train RL agents safely? We propose to pretrain a model-based agent in a mix of sandbox environments, then plan pessimistically when finetuning in the target environment.

Jun 1, 1010

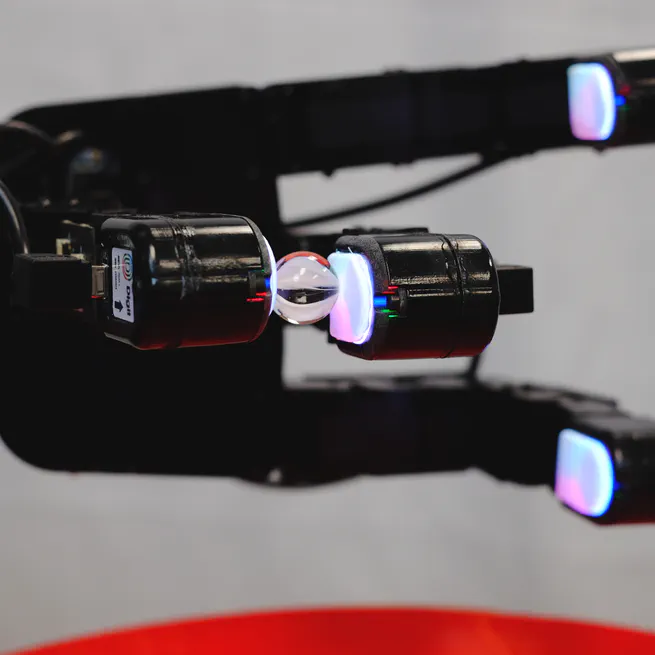

We design and demonstrate a new tactile sensor for in-hand manipulation with a robotic hand.

May 17, 17170

In visual prediction tasks, letting your predictive model choose which times to predict has two effects: (i) it improves prediction quality, and (ii) it leads to semantically coherent "bottleneck state" predictions, which are useful for planning.

Jan 1, 1010

High-resolution tactile sensing, combined with visual approaches to prediction and planning with deep neural networks, enables high-precision tactile servoing.

Jan 1, 1010

Active visual perception with realistic and complex imagery can be formulated as an end-to-end reinforcement learning problem, the solution to which benefits from additionally exploiting the auxiliary task of action-conditioned future prediction.

Jan 1, 1010

An agent's continuous visual observations include information about how the world responds to its actions. This can provide an effective source of self-supervision for learning visual representations.

Jan 1, 1010

Assuming a world that mostly changes smoothly, continuous video streams entail implicit supervision that can be effectively exploited for learning visual representations.

Jan 1, 1010