Jan 1

Sep 13

Jul 1

May 15

Jan 4

Jan 1

Jan 1

Identifying and upsampling important frames from demonstration data can significantly boost imitation learning from observation histories, and scales easily to complex settings such as vision-based autonomous driving.
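As a rough illustration of frame upsampling, the sketch below weights demonstration frames by a hypothetical importance heuristic (a frame counts as "key" when the expert action changes from the previous step) and draws training indices in proportion to those weights. The heuristic, the `boost` factor, and the function names are assumptions for illustration, not the method described above.

```python
import random
from typing import List, Sequence


def keyframe_weights(actions: Sequence[int], boost: float = 5.0) -> List[float]:
    """Assign a sampling weight to each frame.

    Hypothetical heuristic: frames where the expert action differs from the
    previous frame's action are treated as key frames and get `boost` weight.
    """
    weights = []
    for t, a in enumerate(actions):
        is_key = t > 0 and a != actions[t - 1]
        weights.append(boost if is_key else 1.0)
    return weights


def sample_batch(actions: Sequence[int], batch_size: int,
                 boost: float = 5.0, seed: int = 0) -> List[int]:
    """Draw frame indices with replacement, upsampling key frames."""
    rng = random.Random(seed)
    w = keyframe_weights(actions, boost)
    return rng.choices(range(len(actions)), weights=w, k=batch_size)


if __name__ == "__main__":
    demo_actions = [0, 0, 1, 1, 1, 0, 0]
    print(keyframe_weights(demo_actions))
    print(sample_batch(demo_actions, batch_size=8))
```

Sampling with replacement keeps every frame in play while shifting probability mass toward the frames the heuristic marks as important.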

Oct 8

Aug 1

We show empirically that the sample complexity and asymptotic performance of learned non-linear controllers in partially observable settings continue to follow theoretical limits based on the difficulty of state estimation.

May 7

Mar 1

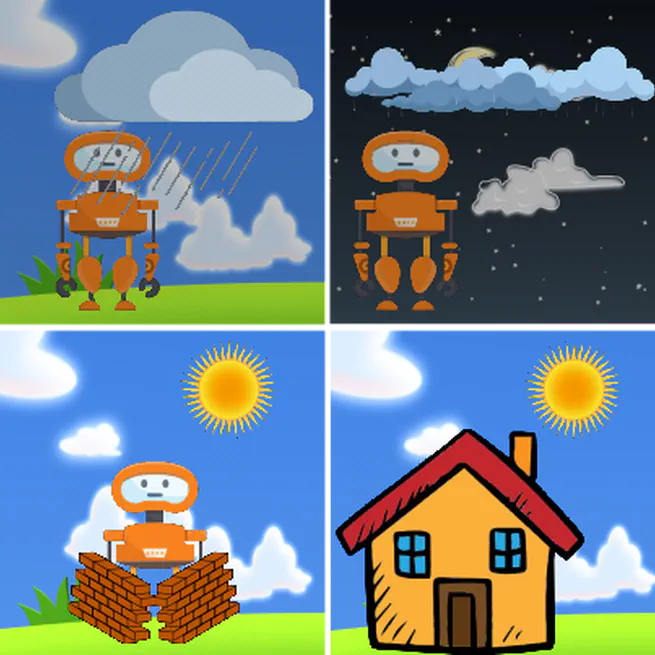

We formulate homeostasis as an intrinsic motivation objective and show that minimizing Bayesian surprise with RL produces interesting emergent behavior across many environments.
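One simple way to make a surprise-minimizing reward concrete is sketched below. It assumes, as a simplified stand-in for Bayesian surprise, that surprise is the negative log-likelihood of each new observation under a running per-dimension Gaussian fitted to the observations seen so far; the agent is rewarded with the log-likelihood, so familiar observations pay more. The class and function names are hypothetical.

```python
import math
from typing import List, Sequence


class GaussianSurpriseModel:
    """Running diagonal Gaussian over observations (Welford's algorithm).

    Assumed stand-in density model; not the formulation from the work above.
    """

    def __init__(self, dim: int, min_var: float = 1e-2):
        self.n = 0
        self.mean: List[float] = [0.0] * dim
        self.m2: List[float] = [0.0] * dim
        self.min_var = min_var  # floor to keep log-probs finite

    def log_prob(self, obs: Sequence[float]) -> float:
        total = 0.0
        for i, x in enumerate(obs):
            # Use unit variance until at least two samples have been seen.
            var = self.m2[i] / self.n if self.n > 1 else 1.0
            var = max(var, self.min_var)
            total += -0.5 * (math.log(2 * math.pi * var) + (x - self.mean[i]) ** 2 / var)
        return total

    def update(self, obs: Sequence[float]) -> None:
        self.n += 1
        for i, x in enumerate(obs):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (x - self.mean[i])


def surprise_reward(model: GaussianSurpriseModel, obs: Sequence[float]) -> float:
    """Reward = log p(obs) under the current model (high when unsurprising), then update."""
    r = model.log_prob(obs)
    model.update(obs)
    return r
```

An RL agent maximizing this reward is pushed toward states that keep its observations predictable, which is one reading of homeostasis as an intrinsic objective.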

Jan 30

Oct 15

To plan towards long-term goals through visual prediction, we propose a model based on two key ideas: (i) predict in a goal-conditioned way to restrict planning only to useful sequences, and (ii) recursively decompose the goal-conditioned prediction task into an increasingly fine series of subgoals.
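The recursive decomposition in idea (ii) can be sketched as a divide-and-conquer procedure: given a start and a goal, predict a midpoint subgoal, then recurse on each half until the desired depth, yielding `2**depth + 1` states. In the sketch below the predictor is a hypothetical placeholder (linear interpolation) standing in for a learned goal-conditioned model; only the recursion structure is the point.

```python
from typing import Callable, List

State = List[float]
Predictor = Callable[[State, State], State]


def midpoint_predictor(start: State, goal: State) -> State:
    """Hypothetical stand-in for a learned goal-conditioned predictor."""
    return [(s + g) / 2 for s, g in zip(start, goal)]


def decompose(start: State, goal: State, depth: int,
              predict: Predictor = midpoint_predictor) -> List[State]:
    """Recursively fill in subgoals between start and goal.

    Each level halves every interval, so the result has 2**depth + 1 states,
    from coarse (the first midpoint) to fine (the deepest subgoals).
    """
    if depth == 0:
        return [start, goal]
    mid = predict(start, goal)
    left = decompose(start, mid, depth - 1, predict)
    right = decompose(mid, goal, depth - 1, predict)
    return left[:-1] + right  # drop the midpoint duplicated at the seam


if __name__ == "__main__":
    print(decompose([0.0], [8.0], depth=3))
```

Because every prediction is conditioned on both endpoints, the generated sequence is constrained to end at the goal, which is what restricts planning to useful sequences.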

Jun 1

How can RL agents be trained safely? We propose pretraining a model-based agent in a mix of sandbox environments, then planning pessimistically when finetuning in the target environment.
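A common way to plan pessimistically with a learned model is to score each candidate plan by its worst-case return across an ensemble of models. The sketch below does this with random-shooting planning over scalar states and actions; the ensemble, the random-shooting planner, and all names are assumptions chosen for illustration rather than the specific procedure proposed above.

```python
import random
from typing import Callable, List, Sequence, Tuple

# A model maps (state, action) -> (next_state, reward).
Model = Callable[[float, float], Tuple[float, float]]


def rollout_return(model: Model, state: float, actions: Sequence[float]) -> float:
    """Sum of predicted rewards when executing `actions` in `model`."""
    total = 0.0
    for a in actions:
        state, r = model(state, a)
        total += r
    return total


def pessimistic_plan(models: List[Model], state: float, horizon: int = 5,
                     n_candidates: int = 64, seed: int = 0) -> Tuple[float, float]:
    """Random-shooting planner that ranks plans by their worst ensemble return.

    Returns the first action of the best plan and that plan's pessimistic score.
    """
    rng = random.Random(seed)
    best_seq: List[float] = []
    best_score = float("-inf")
    for _ in range(n_candidates):
        seq = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        # Pessimism: a plan is only as good as its worst predicted outcome.
        score = min(rollout_return(m, state, seq) for m in models)
        if score > best_score:
            best_seq, best_score = seq, score
    return best_seq[0], best_score
```

When ensemble members disagree, the minimum penalizes plans whose outcome is uncertain, which biases the agent toward behavior that all models agree is acceptable.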

Jun 1

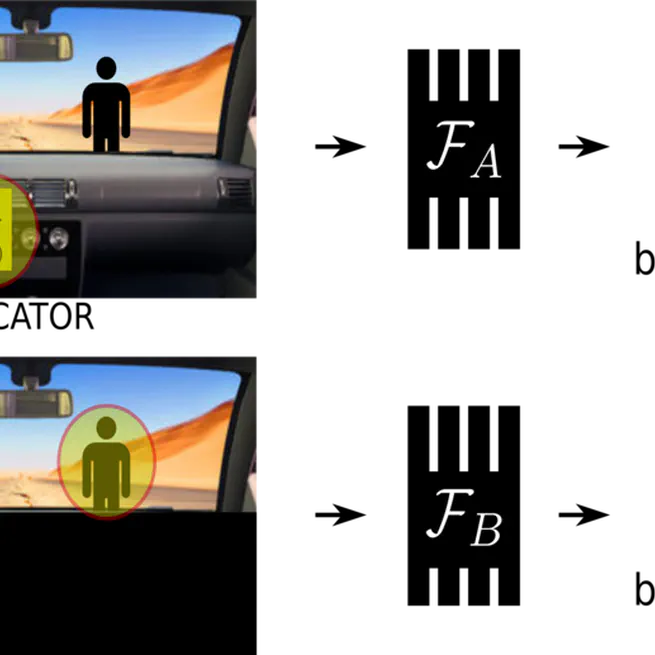

"Causal confusion", where spurious correlates are mistaken for causes of expert actions, is prevalent in imitation learning, leading to counterintuitive results in which additional information can worsen task performance. How might one address this?

Dec 12

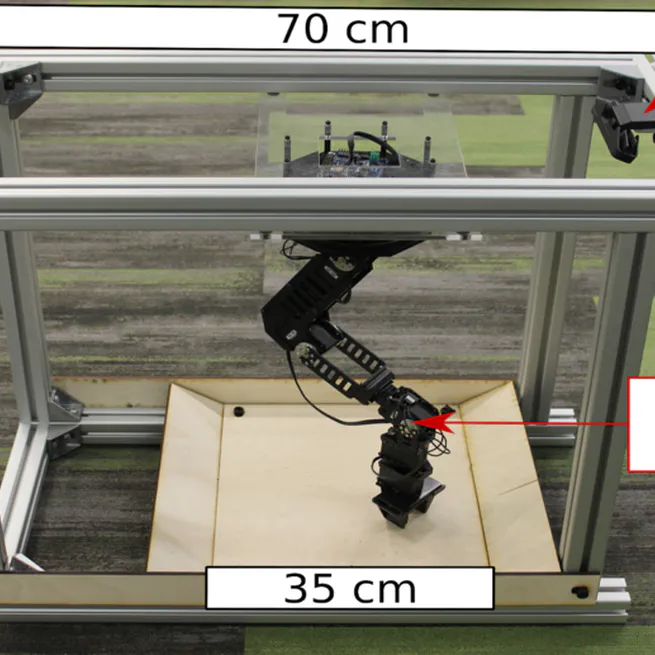

We propose a low-cost, compact, easily replicable hardware stack for manipulation tasks that can be assembled within a few hours. We also provide implementations of robot learning algorithms for grasping (supervised learning) and reaching (reinforcement learning). Contributions invited!

Jan 1

Jan 1

Jan 1

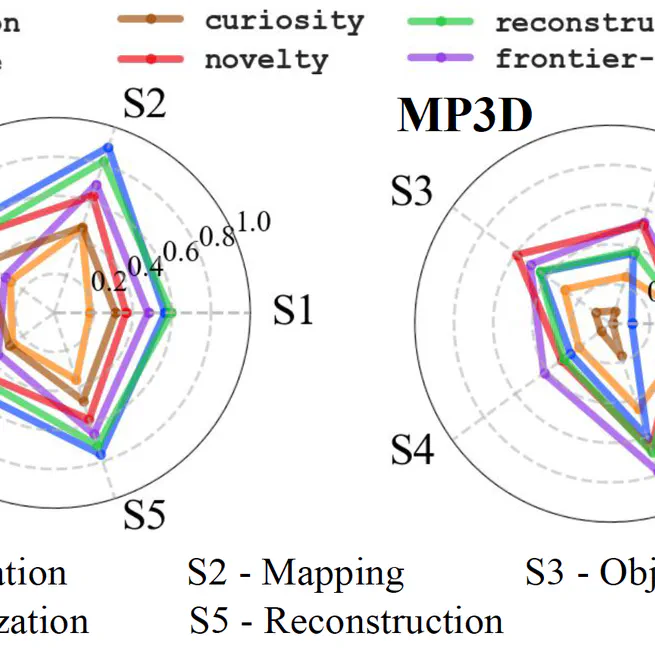

Task-agnostic visual exploration policies may be trained through a proxy "observation completion" task that requires an agent to "paint" unobserved views given a small set of observed views.
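To make the observation-completion proxy concrete, the toy sketch below treats a scene as a short list of scalar "views". A hypothetical completion model fills unobserved views with the mean of the observed ones, and an exploration step is scored by how much observing a new view reduces completion error. Both the completion model and the use of error reduction as a reward are assumptions for illustration; a real system would use learned image completion.

```python
from typing import Dict, List, Sequence


def complete(observed: Dict[int, float], n_views: int) -> List[float]:
    """Hypothetical completion model: unobserved views get the mean of observed ones."""
    fill = sum(observed.values()) / len(observed) if observed else 0.0
    return [observed.get(i, fill) for i in range(n_views)]


def completion_error(observed: Dict[int, float], true_views: Sequence[float]) -> float:
    """Mean squared error between the completed panorama and the true views."""
    pred = complete(observed, len(true_views))
    return sum((p - t) ** 2 for p, t in zip(pred, true_views)) / len(true_views)


def exploration_reward(observed: Dict[int, float], new_view: int,
                       true_views: Sequence[float]) -> float:
    """Assumed reward: reduction in completion error after observing `new_view`."""
    before = completion_error(observed, true_views)
    after_obs = dict(observed)
    after_obs[new_view] = true_views[new_view]
    after = completion_error(after_obs, true_views)
    return before - after
```

Under this scoring, a policy learns to look where its current "painting" of the scene is most wrong, without any task-specific reward.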

Jan 1

Active visual perception with realistic, complex imagery can be formulated as an end-to-end reinforcement learning problem, whose solution benefits from additionally exploiting the auxiliary task of action-conditioned future prediction.
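A minimal way to combine the two objectives is a weighted sum: a policy-gradient loss for the RL task plus a mean-squared error on predicted next-step features, where the prediction is conditioned on the chosen action. The sketch below uses plain REINFORCE and a hypothetical `aux_weight`; the exact losses and weighting in the work above may differ.

```python
from typing import Sequence


def reinforce_loss(log_probs: Sequence[float], returns: Sequence[float]) -> float:
    """REINFORCE surrogate: minus the mean of log pi(a_t|s_t) times return G_t."""
    return -sum(lp * g for lp, g in zip(log_probs, returns)) / len(log_probs)


def prediction_loss(pred_next: Sequence[float], true_next: Sequence[float]) -> float:
    """MSE between features predicted from (observation, action) and the actual next features."""
    return sum((p - t) ** 2 for p, t in zip(pred_next, true_next)) / len(pred_next)


def total_loss(log_probs: Sequence[float], returns: Sequence[float],
               pred_next: Sequence[float], true_next: Sequence[float],
               aux_weight: float = 0.5) -> float:
    """RL objective plus a weighted auxiliary future-prediction objective."""
    return (reinforce_loss(log_probs, returns)
            + aux_weight * prediction_loss(pred_next, true_next))
```

In a real model both terms would be backpropagated through a shared visual encoder, so the prediction task shapes the features the policy relies on.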

Jan 1

Jan 1

Jan 1