Jul 25, 25250

Mar 1, 1010

We learn reward functions in an unsupervised object-keypoint space, which lets us follow third-person demonstrations with model-based RL.
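A minimal sketch of what a keypoint-space reward could look like. It assumes that an unsupervised detector (not shown) has already mapped every frame to a fixed, index-matched set of 2D keypoints, and that the reward is the negative mean distance to the demonstration's keypoints at the same timestep. The function names and the distance choice are illustrative assumptions, not the method from this work.

```python
import math
from typing import List, Tuple

Keypoint = Tuple[float, float]  # (x, y) image coordinates from a keypoint detector


def keypoint_reward(current: List[Keypoint], target: List[Keypoint]) -> float:
    """Negative mean Euclidean distance between index-matched keypoints.

    Assumption: keypoint i in `current` corresponds to keypoint i in `target`.
    """
    if len(current) != len(target):
        raise ValueError("keypoint sets must have the same length")
    dists = [math.dist(c, t) for c, t in zip(current, target)]
    return -sum(dists) / len(dists)


def trajectory_rewards(agent_kps: List[List[Keypoint]],
                       demo_kps: List[List[Keypoint]]) -> List[float]:
    """Per-timestep rewards for tracking a (third-person) demonstration in keypoint space."""
    return [keypoint_reward(a, d) for a, d in zip(agent_kps, demo_kps)]
```

Because the reward only depends on keypoint positions, the demonstrator's body never has to match the robot's; a model-based planner would then choose actions that maximize the sum of these rewards over its predicted rollout.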

Oct 15, 15150

We demonstrate visual control of a robot with unknown morphology within 20 seconds, using a single uncalibrated RGB-D camera.

May 17, 17170

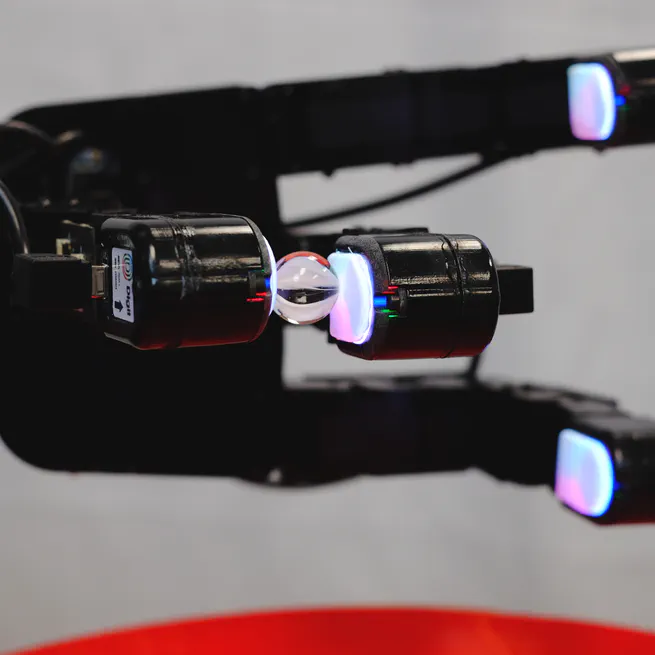

We design a new tactile sensor and demonstrate it on in-hand manipulation with a robotic hand.

May 17, 17170

Combining high-resolution tactile sensing with visual prediction and planning approaches based on deep neural networks enables high-precision tactile servoing tasks.
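To make "prediction and planning" concrete, here is a minimal random-shooting model-predictive-control sketch on tactile images. Everything here is an assumption for illustration: tactile images are flattened lists of floats, the "learned" predictive model is a hypothetical linear toy (`toy_model`, `SENSITIVITY`), and the cost is the squared distance to a goal tactile image summed over the predicted rollout. A real system would use a deep video-prediction model in place of `toy_model`.

```python
import random
from typing import Callable, List, Optional

TactileImage = List[float]                      # flattened tactile image (toy stand-in)
Model = Callable[[TactileImage, float], TactileImage]


def trajectory_cost(model: Model, image: TactileImage,
                    actions: List[float], goal: TactileImage) -> float:
    """Sum of squared distances to the goal image along the predicted rollout."""
    cost, pred = 0.0, image
    for a in actions:
        pred = model(pred, a)
        cost += sum((p - g) ** 2 for p, g in zip(pred, goal))
    return cost


def plan_first_action(model: Model, image: TactileImage, goal: TactileImage,
                      horizon: int = 3, num_samples: int = 200,
                      max_action: float = 1.0,
                      rng: Optional[random.Random] = None) -> float:
    """Random shooting: sample action sequences, keep the cheapest, return its first action."""
    rng = rng or random.Random(0)
    best_cost, best_first = float("inf"), 0.0
    for _ in range(num_samples):
        actions = [rng.uniform(-max_action, max_action) for _ in range(horizon)]
        cost = trajectory_cost(model, image, actions, goal)
        if cost < best_cost:
            best_cost, best_first = cost, actions[0]
    return best_first


# Hypothetical toy "learned" model: each taxel responds linearly to a 1-D action.
SENSITIVITY = [1.0, 0.5]


def toy_model(image: TactileImage, action: float) -> TactileImage:
    return [x + action * s for x, s in zip(image, SENSITIVITY)]


def servo(model: Model, image: TactileImage, goal: TactileImage,
          steps: int = 10, seed: int = 0) -> TactileImage:
    """Closed-loop servoing: replan every step and execute only the first action.

    Here the toy model doubles as the real system, purely for illustration.
    """
    rng = random.Random(seed)
    for _ in range(steps):
        image = model(image, plan_first_action(model, image, goal, rng=rng))
    return image
```

For example, `servo(toy_model, [0.0, 0.0], [2.0, 1.0])` drives the simulated tactile reading toward the goal image. Replanning at every step is what lets such a controller correct for prediction errors.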


Jan 1, 1010

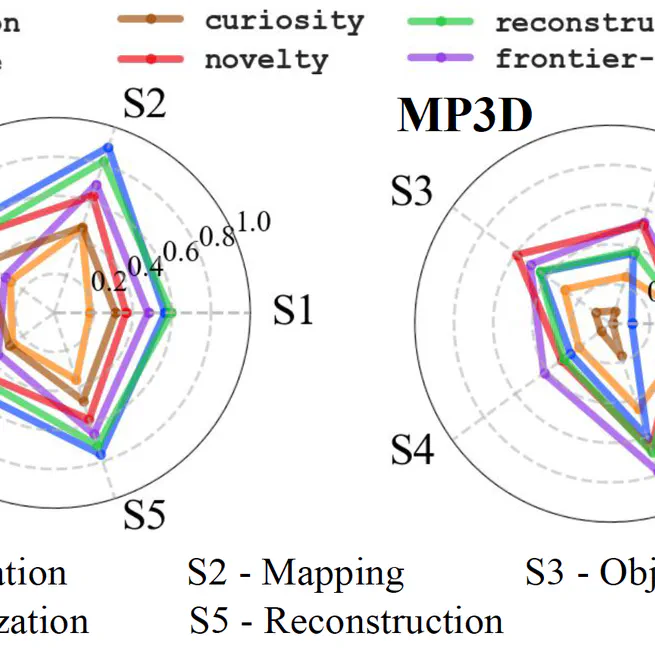

Appearance-based representations in the form of viewgrids provide a useful framework for self-supervised representation learning: a network is trained to reconstruct full object shapes or scenes.
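A small sketch of the viewgrid data structure and a reconstruction objective, under stated assumptions: a viewgrid is taken to be a regular grid of views indexed by (elevation, azimuth), each view is a flattened list of floats, and the loss is per-pixel mean squared error over all cells. `copy_observed_baseline` is a hypothetical naive predictor that a trained network would be expected to beat; it is not part of the described approach.

```python
from typing import Dict, List, Tuple

View = List[float]                       # one rendered view, flattened (toy representation)
ViewGrid = Dict[Tuple[int, int], View]   # (elevation index, azimuth index) -> view


def viewgrid_keys(num_elev: int, num_azim: int) -> List[Tuple[int, int]]:
    """All (elevation, azimuth) cells of a regular viewgrid."""
    return [(e, a) for e in range(num_elev) for a in range(num_azim)]


def copy_observed_baseline(observed: View, keys: List[Tuple[int, int]]) -> ViewGrid:
    """Naive 'reconstruction': repeat the single observed view in every cell."""
    return {k: list(observed) for k in keys}


def viewgrid_mse(pred: ViewGrid, target: ViewGrid) -> float:
    """Per-pixel mean squared error over every cell of the viewgrid."""
    total, count = 0.0, 0
    for key, target_view in target.items():
        for p, t in zip(pred[key], target_view):
            total += (p - t) ** 2
            count += 1
    return total / count
```

Since the target viewgrid is just more renderings of the same object or scene, this loss needs no human labels, which is what makes the task self-supervised.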

Jan 1, 1010

By combining high-precision tactile sensing with deep learning, robots can iteratively adjust their grasp configurations, boosting grasping performance from 65% to 94%.
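The iterative adjustment loop can be sketched as follows, assuming a learned model that scores a candidate grasp adjustment by its predicted success probability. Here the grasp is reduced to a single scalar parameter and `toy_success` is a hypothetical stand-in for a network that would actually read tactile data; the threshold, step size, and candidate count are illustrative choices, not values from this work.

```python
import math
import random
from typing import Callable, Optional

# predict_success(grasp, adjustment) -> predicted probability that the adjusted grasp succeeds
SuccessModel = Callable[[float, float], float]


def regrasp(predict_success: SuccessModel, grasp: float, threshold: float = 0.9,
            max_iters: int = 5, num_candidates: int = 64, max_step: float = 0.5,
            rng: Optional[random.Random] = None) -> float:
    """Iteratively pick the adjustment with the highest predicted success."""
    rng = rng or random.Random(0)
    for _ in range(max_iters):
        if predict_success(grasp, 0.0) >= threshold:
            break  # current grasp already looks good enough: stop adjusting
        candidates = [rng.uniform(-max_step, max_step) for _ in range(num_candidates)]
        best = max(candidates, key=lambda d: predict_success(grasp, d))
        grasp += best  # apply the most promising adjustment, then re-evaluate
    return grasp


def toy_success(grasp: float, adjustment: float) -> float:
    """Hypothetical predictor: success peaks when the adjusted grasp reaches 0.3."""
    return math.exp(-10.0 * (grasp + adjustment - 0.3) ** 2)
```

Starting from a poor grasp such as `regrasp(toy_success, -0.4)`, the loop takes a few bounded steps toward the predicted optimum and stops once the predicted success clears the threshold.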

Jan 1, 1010

Task-agnostic visual exploration policies may be trained through a proxy "observation completion" task that requires an agent to "paint" unobserved views given a small set of observed views.
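As an illustration of the proxy task, the sketch below scores how well a set of observed views lets the unobserved ones be "painted in", and greedily picks the next view that most reduces that completion error. Strong assumptions: views lie on a ring of azimuths and each view is summarized by one number; `complete_views` is a hypothetical nearest-observed-neighbor painter standing in for a learned decoder; and the greedy chooser uses ground-truth views, so it acts as an oracle that can only provide a training signal, not as the learned exploration policy itself.

```python
from typing import List, Set


def complete_views(views: List[float], observed: Set[int]) -> List[float]:
    """Toy 'painter': fill each unobserved azimuth with the nearest observed one (circular)."""
    n = len(views)

    def circ(a: int, b: int) -> int:
        d = abs(a - b) % n
        return min(d, n - d)

    return [views[i] if i in observed
            else views[min(observed, key=lambda o: (circ(i, o), o))]
            for i in range(n)]


def completion_error(views: List[float], observed: Set[int]) -> float:
    """Mean squared error between the painted and the true views."""
    pred = complete_views(views, observed)
    return sum((p - v) ** 2 for p, v in zip(pred, views)) / len(views)


def greedy_next_view(views: List[float], observed: Set[int]) -> int:
    """Oracle choice: the unobserved view whose observation most lowers completion error."""
    candidates = [i for i in range(len(views)) if i not in observed]
    return min(candidates, key=lambda i: (completion_error(views, observed | {i}), i))
```

For `views = [0.0, 0.0, 5.0, 5.0]` with only view `0` observed, looking at view `3` lets the painter recover the whole ring, so the greedy oracle selects it.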

Jan 1, 1010

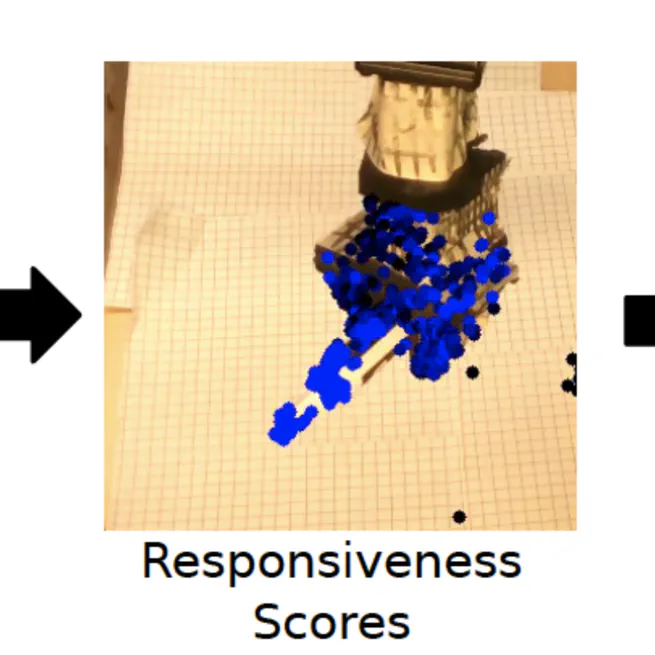

An agent's continuous visual observations include information about how the world responds to its actions. This can provide an effective source of self-supervision for learning visual representations.

Jan 1, 1010