Research

My primary research interests are in the areas of machine learning and artificial intelligence, with a focus on the following topics:

- Lifelong learning of multiple consecutive tasks over long time scales,

- Knowledge transfer between learning tasks, including the automatic cross-domain mapping of knowledge,

- Interactive AI methods that combine system-driven active learning with extensive user-driven control over learning and reasoning processes, and

- Big data analytics through lifelong and transfer learning, with a focus on learning multiple tasks online from streaming data and adjusting for drifting task distributions.

My research applies these methods to problems in autonomous service robotics and precision medicine.

Lifelong Machine Learning

(Related Publications)

Lifelong Machine Learning

(Related Publications)

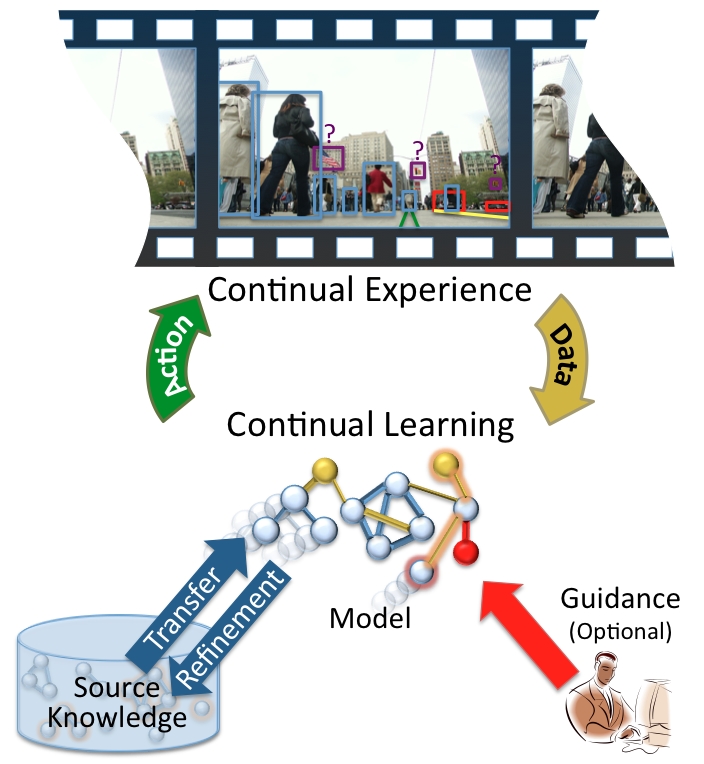

Lifelong learning is essential for an intelligent agent

that will persist in the real world with any amount of versatility.

Animals learn to solve increasingly complex tasks by continually building

upon and refining their knowledge. Virtually every aspect of

higher-level learning and memory involves this process of continual

learning and transfer. In contrast, most current machine

learning methods take a "single-shot" approach in which knowledge is

not retained between learning problems.

My research seeks to develop lifelong machine learning for intelligent

agents situated for extended periods in an environment and faced with

multiple tasks. The agent will continually learn to solve

multiple (possibly interleaved) tasks through a combination of

knowledge transfer from previously learned models, revision of stored

source knowledge from new experience, and optional guidance from

external teachers or human experts. The goal

of this work is to enable persistent agents to develop increasingly

complex abilities over time by continually and synergistically

building upon their knowledge. Lifelong learning could

substantially improve the versatility of learning systems by enabling them

to quickly learn a broad range of complex tasks and adapt to changing

circumstances.

ELLA: Lifelong Learning for Classification and Regression

Under this project, we developed the Efficient Lifelong Learning Algorithm (ELLA) [ICML 2013] -- a method for online multi-task learning of consecutive tasks that has equivalent performance to batch multi-task learning with over a 1,000x speedup. ELLA learns and maintains a repository of shared knowledge, rapidly learning new task models by building upon previous knowledge. ELLA provides a variety of theoretical guarantees on performance and convergence, along with state-of-the-art performance on supervised multi-task learning problems. It also supports active task selection to intelligently choose the next task to learn in order to maximize performance [AAAI 2013].

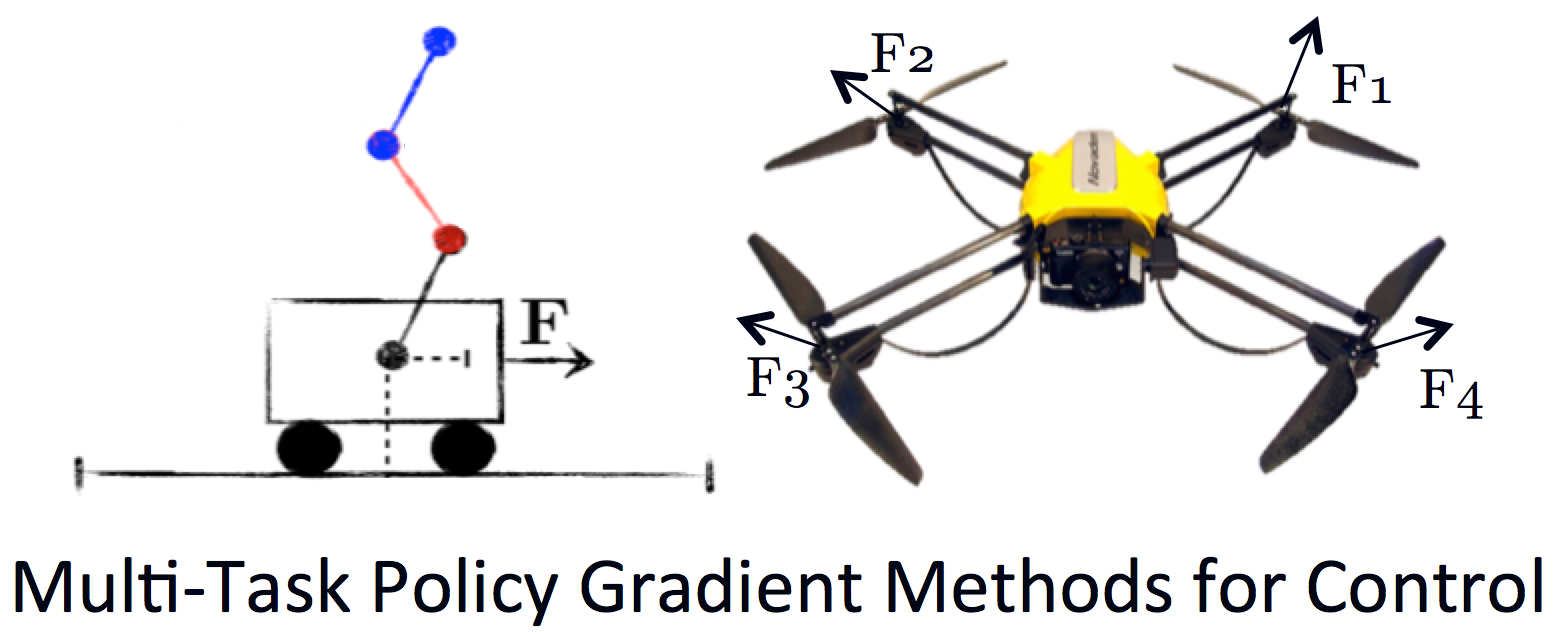

Lifelong Reinforcement Learning for Robotic Control

We extended the ELLA framework to reinforcement learning settings, focusing on policy gradient methods [ICML 2014]. Policy gradient methods support sequential decision making with continuous state and action spaces, and have been use with great success for robotic control. Our PG-ELLA algorithm incorporates ELLA's notion of using a shared basis to transfer knowledge between multiple sequential decision making tasks, and provides a computationally efficient method of learning new control policies by building upon previously learned knowledge. Rapid learning of control policies for new systems is essential to minimize both training time as well as wear-and-tear on the robot. We applied PG-ELLA to learn control policies for a variety of dynamical systems with non-linear and highly chaotic behavior, including an application to quadrotor control. We also developed a fully online variant of this approach with sublinear regret that incorporates safety constraints [ICML 2015], and applied this technique to disturbance compensation in robotics [IROS 2016].

Autonomous Cross-Domain Transfer

Despite their success, these approaches only support transfer between RL problems with the same state-action space. To support lifelong learning over tasks from different domains, we developed an approach for autonomous cross-domain transfer in lifelong learning [IJCAI 2015 Best Paper Nomination]. For the first time, this approach allows transfer between radically different task domains, such as from cart pole balancing to quadrotor control.

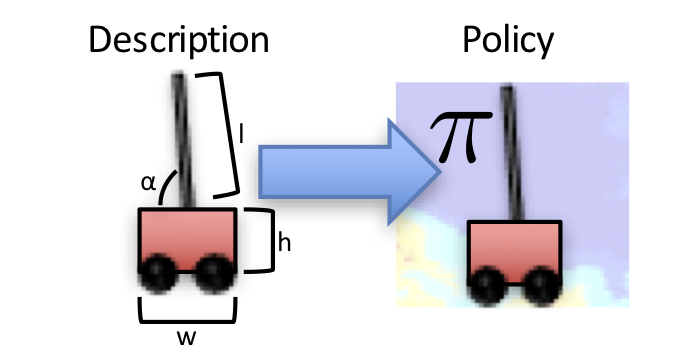

Zero-Shot Transfer in Lifelong Learning using High-Level Descriptors

To further accelerate lifelong learning, we showed that providing the agent with a high level description of each task can both improve transfer performance and support zero-shot transfer [IJCAI 2016 Best Student Paper]. Given only a high level description of a new task, this approach can predict a high performance controller for the new task immediately through zero-shot transfer, allowing the agent to immediately perform on the new task without expending time to gather data before it can perform.

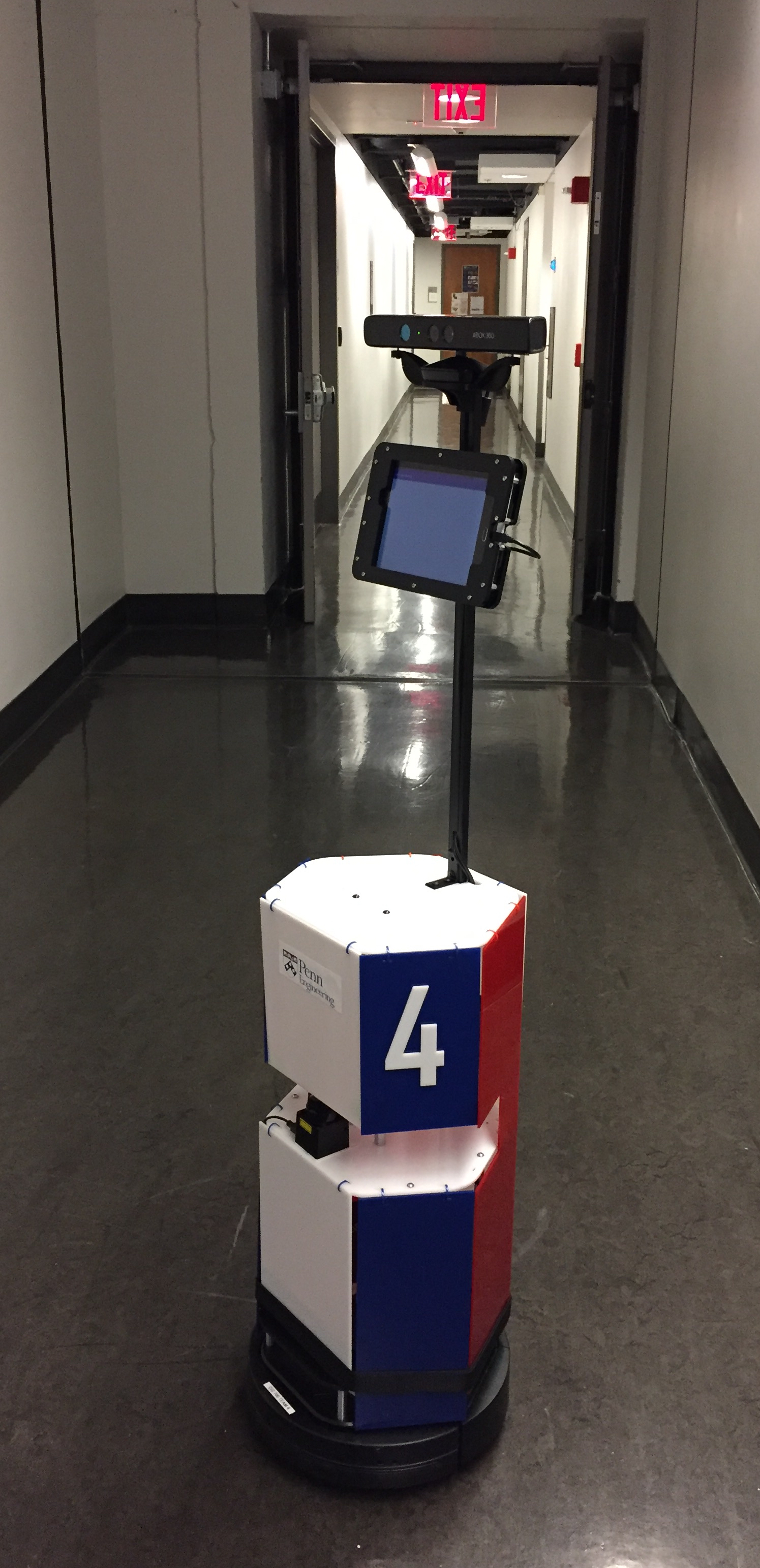

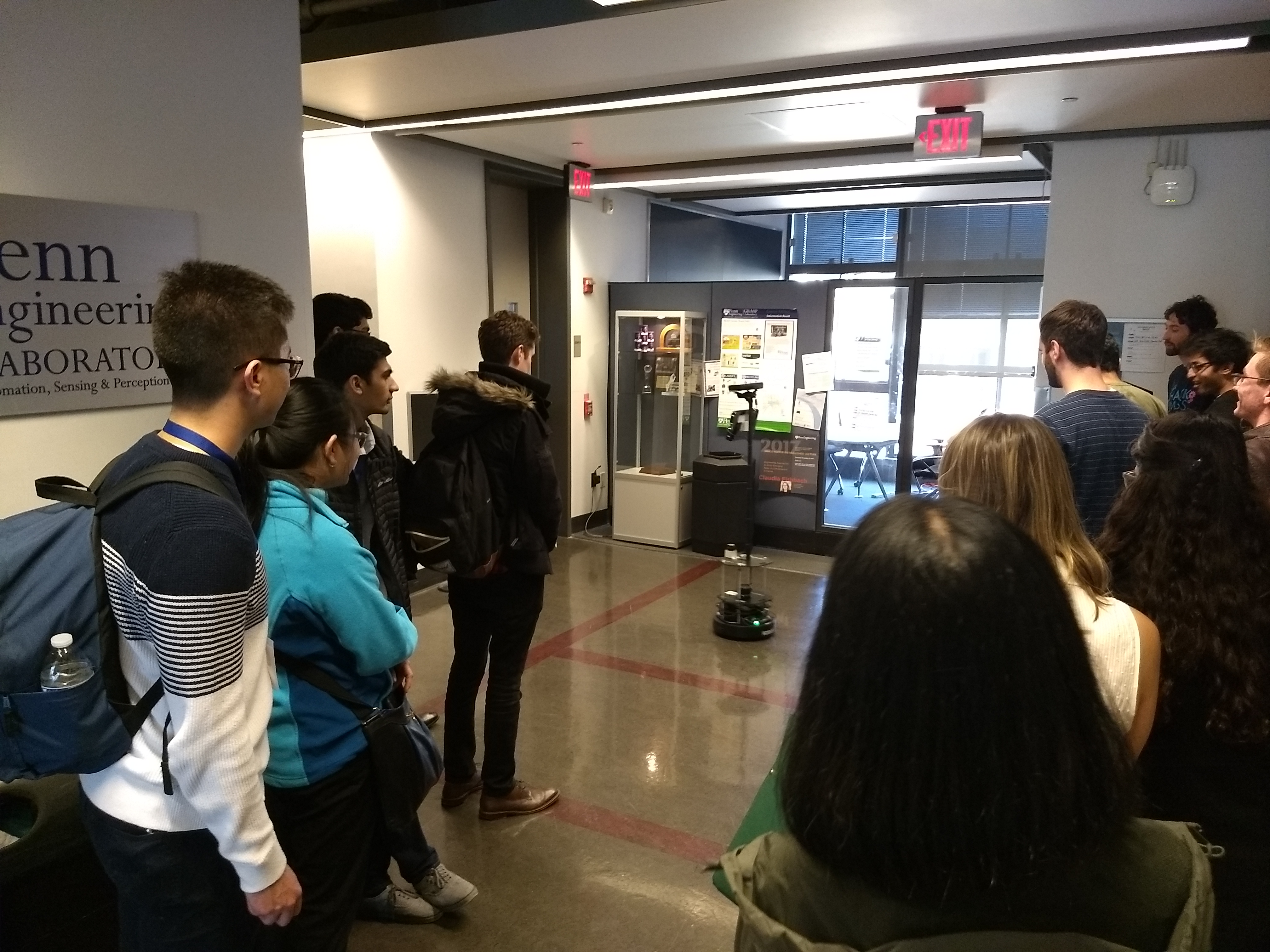

Autonomous Mobile Service Robots

My research group is developing a fleet of low-cost autonomous service robots that can operate continually in university, office, and home environments. These robots will learn a wide variety of skills involving perception, navigation, control, and multi-robot coordination over their lifetime, serving as a major testbed for lifelong machine learning algorithms.

Additionally, visitors to the GRASP lab can be greeted and then taken on a 7-minute tour by the service robots, as shown in the videos below. The photo on the right shows an earlier version of the autonomous service robot giving a tour to prospective PhD students.

Interactive Artificial Intelligence (Related Publications)

Selective Knowledge Transfer (Related Publications)

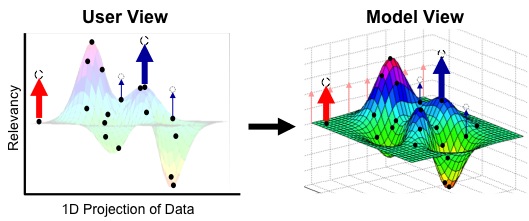

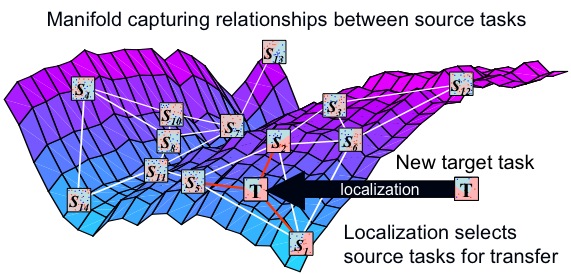

My dissertation research [Eaton, 2009] focused on the problem of source selection in transfer learning: given a set of previously learned source tasks, how can we select the knowledge to transfer in order to best improve learning on a new target task? In this context, a task is a single learning problem, such as learning to recognize a particular visual object. Until my dissertation, the problem of source knowledge selection for transfer learning had received little attention, despite its importance to the development of robust transfer learning algorithms. Previous methods assumed that all source tasks were relevant, including those that provided irrelevant knowledge, which can interfere with learning through the phenomenon of negative transfer.

My dissertation research [Eaton, 2009] focused on the problem of source selection in transfer learning: given a set of previously learned source tasks, how can we select the knowledge to transfer in order to best improve learning on a new target task? In this context, a task is a single learning problem, such as learning to recognize a particular visual object. Until my dissertation, the problem of source knowledge selection for transfer learning had received little attention, despite its importance to the development of robust transfer learning algorithms. Previous methods assumed that all source tasks were relevant, including those that provided irrelevant knowledge, which can interfere with learning through the phenomenon of negative transfer.

My results showed that proper source selection can produce large improvements in transfer performance and decrease the risk of negative transfer by identifying the knowledge that would best improve learning of the new task. This aspect can be measured by the transferability between tasks -- a measure, introduced in my dissertation, of the change in performance between learning with and without transfer.

I developed selective transfer methods based on this notion of transferability for two general scenarios: the transfer of individual training instances [Eaton & desJardins, 2011; Eaton & desJardins, 2009] and the transfer of model components between tasks [Eaton, desJardins & Lane in ECML 2008]. In particular, my research on model-based transfer showed that modeling the transferability relationships between tasks using a manifold provides an effective framework for source knowledge selection, providing a geometric framework for understanding how knowledge is best transferred between learning tasks.

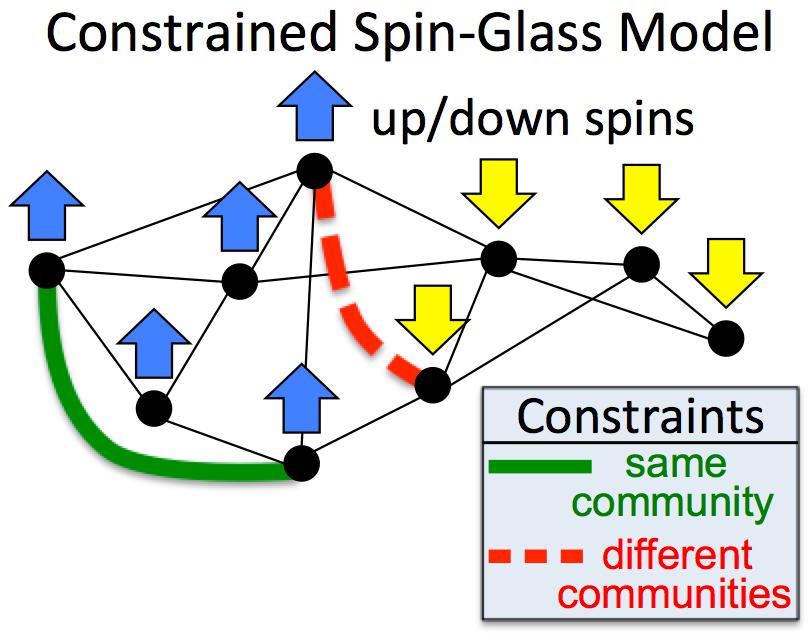

Constrained Clustering (Related

Publications)

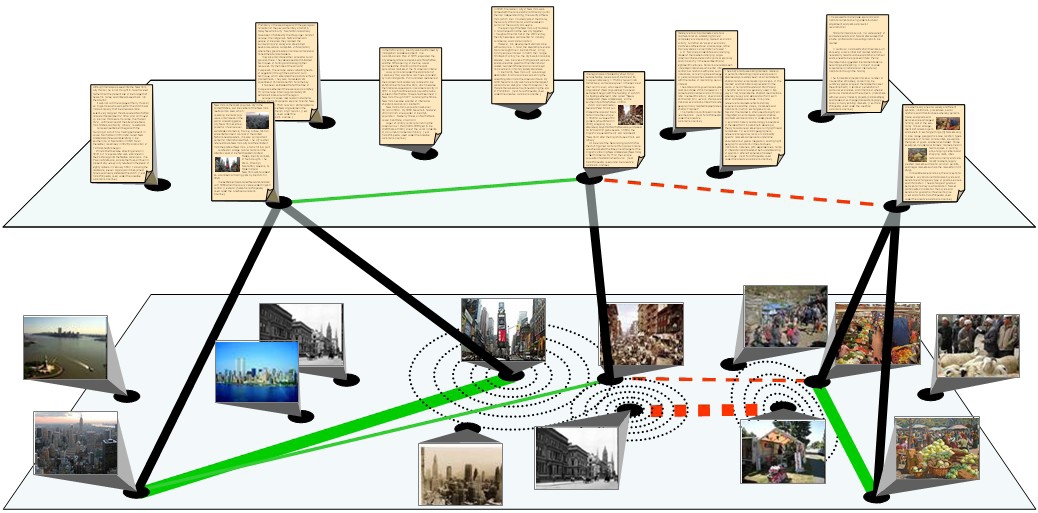

Constrained clustering uses background knowledge in the form of must-link constraints, which specify that two instances belong in the same cluster, and cannot-link constraints, which specify that two instances belong in different clusters, to improve the resulting clustering. My Master's thesis work [Eaton, 2005] focused on a method for propagating a given set of constraints to other data instances based on the cluster geometry, decreasing the number of constraints needed to achieve high performance. This method for constraint propagation was later used as the foundation for the first mult-view constrained clustering method that supports an incomplete mapping between views [Eaton, desJardins, & Jacob in KAIS 2012; Eaton, desJardins, & Jacob in CIKM 2010]. In this method, clustering progress in one view of the data (e.g., images) is propagated via a set of pairwise constraints to improve learning performance in another view (e.g., associated text documents). The key contribution of this work is that it supports an incomplete mapping between views, enabling the method to be successfully applied to a larger range of applications and legacy data sets that have multiple views available for only a limited portion of the data.

Learning

User

Preferences

over

Sets

of

Objects

(Related

Publications)

Learning

User

Preferences

over

Sets

of

Objects

(Related

Publications)

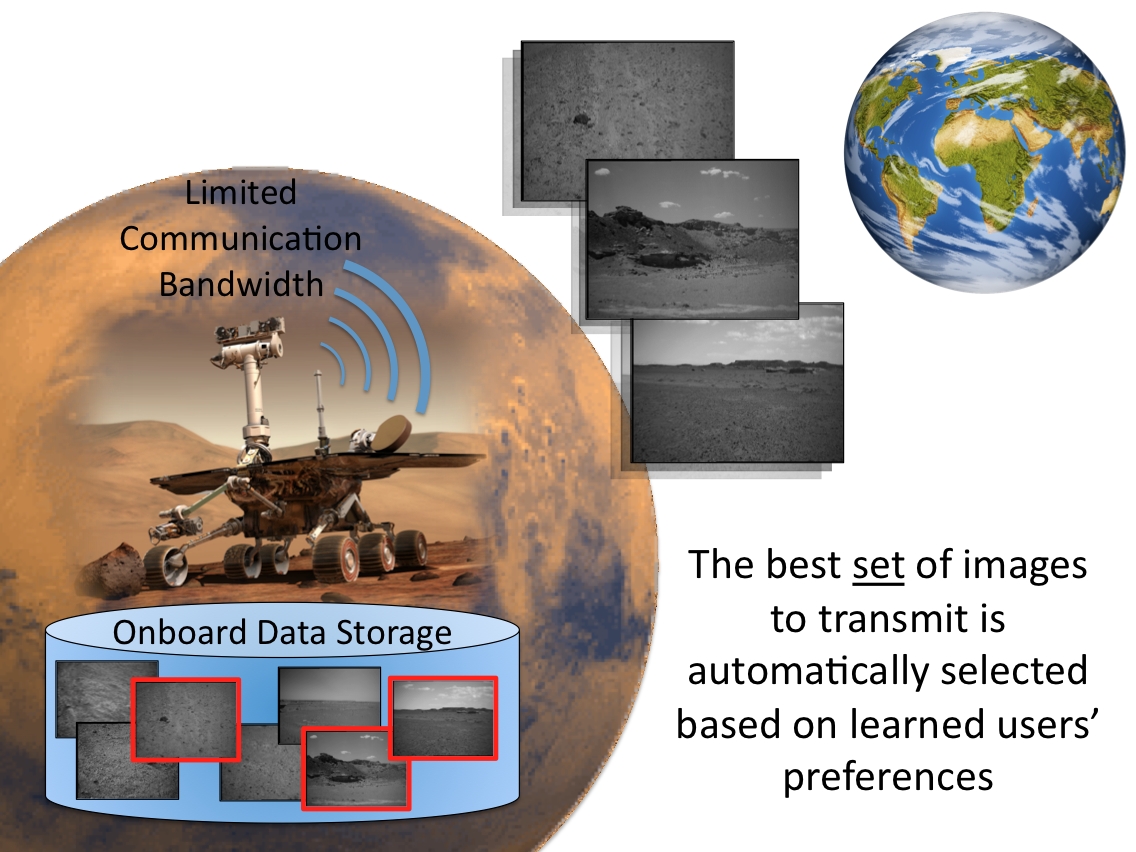

In collaboration with Marie desJardins (UMBC) and Kiri

Wagstaff

(NASA Jet Propulsion Laboratory), I developed the DDPref

framework for

learning and reasoning

about a user's preferences for selecting sets of objects

where items in

the set interact [desJardins,

Eaton

&

Wagstaff,

2006; Wagstaff,

desJardins

& Eaton, 2010]. The DDPref representation captures

interactions

between items within a set, modeling the user's desired

levels of

quality and diversity of items in the set. Our approach

allows a user

to either manually specify a preference representation, or

select

example sets that represent their desired information, from

which we

can learn a representation of their preferences. We applied

the DDPref

method to identify sets of images taken by a remote Mars

rover for

transmission back to the user. Due to the limited

communications

bandwidth, it is important to send back a set of images

which together

captures the user's desired information. This research is

also

applicable to search result set creation, automatic content

presentation, and targeted information acquisition.