Music Library Analysis

Summary of Deliverables

Here’s what you’ll need to submit to Gradescope:

music_library_analysis.ipynb

0. Getting Started

The instructions for this assignment are embedded in the Jupyter Notebook available in the “Music Library Analysis” assignment on Codio. You can complete this assignment just following the directions there. These instructions are preserved on this site just in case you happen to delete your own instructions.

If you need to download starter files or song library files, you can do so here.

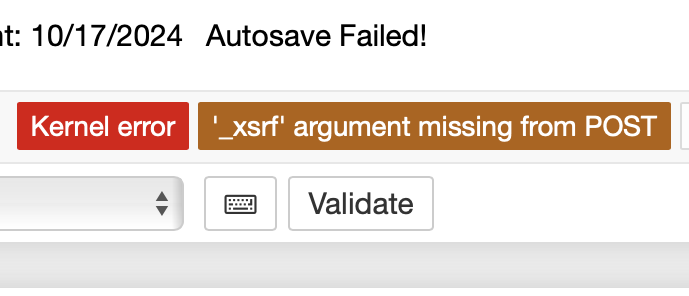

If you use Safari, you might encounter issues running your code. If that’s happening, check to see if you have these two notifications at the top of your notebook.

Make sure to use Chrome or Firefox instead of Safari!

A. Goals

When we use services like Spotify, Apple Music, or Pandora to stream music, we authorize them to collect and store data about us as individuals. This data includes the information we provide directly and the statistics we generate through our listening behavior, which are fairly straightforward and are given with some degree of informed consent. The data they keep on users also includes guesses they make about our behavior. To quote from Spotify’s Privacy Policy, the data they store on each user includes “inferences (i.e., our understanding) of your interests and preferences based on your usage of the Spotify Service.” These inferences have some straightforward uses that benefit us as users, like improving personal recommendations for new music or building procedurally generated playlists. On the other hand, these assumptions that they make about users are also used to sell advertisements on the platform—advertisements that are sometimes personalized to appeal directly to individual users.

This model of data collection is firmly entrenched nowadays, and it has considerable advantages and drawbacks for us as users of these platforms. We benefit from better curation and more efficient delivery of information, but we often have little choice but to offer our personal data up in exchange for commonly desired goods & services.

There are two primary aims of this assignment. The first is the straightforward learning goal of helping you build skills in Data Science. The second is to allow you to practice these skills on data that’s unique to you, giving you some insight into the kinds of inferences that services like Spotify might make about you.

B. Background

For this assignment, you will be writing functions that answer questions about a Spotify user’s taste based on their Liked Songs collection. You can download your own Spotify data using this tool if you want to run your code on your own music library. If you don’t have Spotify, or if you don’t want to use your own data, that’s fine too. You can do your analysis on the provided example files.

If you choose to download your own Spotify data, make sure to download all available data properties by clicking the gear icon on the web app and enabling all “include” options.

C. What You Will Do

First, you will write a function to read a CSV file into a Pandas DataFrame. You will need to make sure that you choose the right settings for read_csv() to make sure that the data is read in the proper formats.

Next, you will perform some exploratory data analysis. You will start by using .shape, .info(), and .describe() to answer a few general questions about the size and structure of your data. These questions will be answered in the submitted README file. You will also use Pandas’ plotting features to reveal the distributions of several of the columns in the dataset.

Then, you will write a handful of functions using Pandas methods that answer some more targeted questions about the dataset. For example, here you will write code to look up songs that are performed by or that feature a particular artist.

Finally, you will examine a walkthrough of how to interact with a predictive model about a user’s taste. You’ll respond to a few questions.

D. Collaboration & Outside Resources

There are two slight tweaks to the typical collaboration policy.

- You are allowed to use the official Pandas documentation as a resource for this assignment. You can find it here. It’s also acceptable to use search engines to help you navigate the documentation. Googling “pandas explode()” is acceptable as a way of finding a link to the relevant page in the Pandas documentation. Modern browsers will often suggest “AI” results, too. It’s unreasonable for us to insist that you avert your eyes from looking at those responses, but be aware that they might lead you down rabbit holes you’re not prepared to follow. (Beware

.apply()…) You must limit yourself to course resources and Pandas documentation for references—if you end up using tons of features we never covered in class, we may follow up and ask you to explain how your solution works… - For the final section containing open-ended questions that ask you to think critically about the model that we build, you’re welcome to discuss with a partner. If you do, you are expected to give a one sentence summary of your discussion—did you agree from the start, did one of you change the other’s mind, or do you two still disagree about an answer?

1. Reading Data

For this section, you will implement the read_songs() function. This function will take in a string representing the path to a CSV file of songs and it should return a DataFrame containing all of those songs, one per row.

There are quite a lot of columns in the dataset! Here’s a full list of them.

"Track URI","Track Name","Artist URI(s)","Artist Name(s)","Album URI","Album Name","Album Artist URI(s)","Album Artist Name(s)","Album Release Date","Album Image URL","Disc Number","Track Number","Track Duration (ms)","Track Preview URL","Explicit","Popularity","ISRC","Added By","Added At","Artist Genres","Danceability","Energy","Key","Loudness","Mode","Speechiness","Acousticness","Instrumentalness","Liveness","Valence","Tempo","Time Signature","Album Genres","Label","Copyrights"

At the end of this writeup, there’s a full description of the purpose of each column. We won’t use all of them.

Pandas’ read_csv() function allows you to create a DataFrame that contains the data stored inside of a given CSV file. The function has a huge number of options that you can pass in as keyword arguments in order to change how the CSV file is interpreted. You can review all of these options in the Pandas documentation here. In this case, read_csv() should get all of the data successfully with the default options, but the datatypes that it chooses may not always be the best ones. In particular, the Album Release Date column will be interpreted as a string by default. This is not “wrong”, but it will make certain tasks more difficult later on. How you handle this is up to you—the following are three (of several possible) choices for how to handle this typing issue:

- Choose a setting for

read_csv()that forces Pandas to parse the column as adatetimeobject. (Less code but more documentation reading.) - Replace the column after reading the CSV by using

to_datetime(). In particular, you should be aware that some dates have Day, Month, and Year whereas some have just the Year. You could almost say that the format is “mixed”…(Less code but more documentation reading.) - Keep the column’s type as a string and just parse out the year yourself. (Less reading but more code.)

Implement the read_songs() function. In Checkpoint 1, use the head() method to inspect the first few rows of your DataFrame and make sure that it looks OK!

def read_songs(filename):

pass

Use head() to inspect the first few rows of your DataFrame and make sure that it looks OK!

df = read_songs('medium.csv')

df.head()

2. Exploratory Data Analysis

A. What Does Your DataFrame Look Like?

It’s important to understand the broad shape of the data you plan to work with: How much of it do you have? What columns does it consist of? Do any of these columns seem more or less useful? What values are typical in each of the columns?

In the following cells, you’ll find a number of questions and space for you to answer them. The cells with the questions are text cells, meaning that what you write in them is not considered Python code. You can type whatever you want, just like you see here. There are also a few empty code cells that you can use to explore your DataFrame—head(), shape, info(), and describe() will all be useful here. When you submit this notebook, keep your work in these code cells! They show us how you answered the more general questions.

EDA Question 1:

How many songs are in the sharry_songs.csv dataset? How many columns are there?

Answer:

Replace this text with your answer & justification.

# write pandas code here to answer this question!

EDA Question 2:

Are there any columns that are entirely or mostly contain missing data?

Answer:

Replace this text with your answer & justification.

# write pandas code here to answer this question!

B. Exploring Distributions

There are a bunch of columns included in this dataset that measure characteristics of the songs: "Energy", "Danceability", "Speechiness", "Acousticness", "Instrumentalness", "Liveness", "Valence". These columns all store numeric values between 0 and 1 measuring the degree to which each song represents the particular characteristic. For example, a song with a "Danceability" value of 0.9 would be highly “danceable”, a song with a "Valence" of 0.1 will be very sad, and a song with an "Instrumentalness" score of 0.9 will have very few vocals.

A quick way to get a picture of a Spotify user’s taste from their song data would be to plot the distributions of each of these features as a bunch of histograms. The function song_characteristics_distributions(), which generates a “small multiples” visualization, is completed for you. You can run the cell below to define the function. The following cell calls that function on the sharry_songs.csv dataset.

def song_characteristics_distributions(df):

"""Generate a small-multiples plot consisting of histograms of the following song characteristics:

- Energy

- Danceability

- Speechiness

- Acousticness

- Instrumentalness

- Liveness

- Valence

Make sure the resulting plot meets the following requirements. Reading the pandas documentation for

DataFrame.plot() will be helpful.

- Give the overall collection of subplots a helpful title.

- Arrange the plots in a 4x2 grid.

- Each histogram should have 40 bins.

- Make sure each histogram shares the same x-axis scale (from 0-1) with tick labels visible.

- The overall plot should be big enough to read without overlapping subplots.

Args:

df: a pandas.DataFrame

"""

axes = df.plot(kind='hist', y=["Energy", "Danceability", "Speechiness", "Acousticness", "Instrumentalness", "Liveness", "Valence"], bins=40, sharex=False, subplots=True, title='Listener Preferences', layout=(4, 2), figsize=(15,10))

for row in axes:

for ax in row:

ax.set_ylabel(None)

ax.set_xticks([0, 0.2, 0.4, 0.6, 0.8, 1.0])

song_characteristics_distributions(read_songs("sharry_songs.csv"))

EDA Question 3

Based on the figures above, make a claim about Harry’s apparent music preferences. Or, download your own data using the process outlined above, call the function on your own data, and make a claim about your own music preferences!

Replace this text with a statement that appears to be true about the distribution of song values above. Here’s one that would be wrong: Harry has no obvious preference between songs that are instrumental and those that feature vocals.

3. Answering Questions

Once we understand what our data “looks like,” we can formulate more specific questions that we’d like to ask about a user’s library.

Each of the following functions represents a kind of question you might ask about a user’s library. Implement each of the functions. Keep in mind the Pandas function toolkit we’ve developed in class, and make sure to have the Pandas documentation close at hand.

There are also edge cases defined for each of the functions below.

The functions are presented in roughly increasing order of complexity. Aim to complete the first two in one or two lines. The following ones will require a bit more work.

Standard Deviation Tools & Tips:

You’ll probably find the .std() function helpful here. Remember that you need to return the standard deviation of a single column, so you’ll need to select the proper one before using .std().

def standard_deviation_of_energy(df):

"""Calculates the standard deviation of the energy values in the library.

The standard deviation is defined as the square root of the average squared

difference between each value and the mean. If the library is empty, return 0.

Args:

df: a pandas.DataFrame

Returns:

a float, the standard deviation of the energy values in the library.

Tests:

>>> standard_deviation_of_energy(read_songs('small.csv'))

0.23676853811956278

>>> standard_deviation_of_energy(read_songs('medium.csv'))

0.23761001852234664

>>> standard_deviation_of_energy(read_songs('sharry_songs.csv'))

0.2304914250538867

"""

pass

Songs Per Year Tools & Tips:

There are a few ways to approach this problem depending on what data type you’re using for the "Album Release Date" column.

If you’re using a datetime object, then you can extract the year value from a Series with the dt.year attribute. The linked documentation has an illuminating example. Once you have each song’s year, you can solve the problem in one or two more steps with either filtering or .value_counts().

If you’re using a str object, then you’ll have to extract the year by parsing that out of the string. Inspect the column manually and see if you can identify which positions the year occupies in the date strings. (Is it the same for both date formats found in the file?) You can mimic typical Python string slicing (i.e. s[start:stop:step]) with the Pandas function str.slice() function. Once you have a way of isolating the year, the problem can be solved with filtering or .value_counts().

def count_songs_from_year(df, year):

"""Counts the number of songs from a specific year in the library. If the library is empty, return 0.

Bear in mind that the year argument is passed in as an integer. The "Album Release Date" column in the DataFrame

Args:

df: a pandas.DataFrame

year: an int

Returns:

an int, the number of songs from the specified year.

Tests:

>>> count_songs_from_year(read_songs('small.csv'), 2015)

4

>>> count_songs_from_year(read_songs('medium.csv'), 2018)

14

>>> count_songs_from_year(read_songs('sharry_songs.csv'), 2020)

78

"""

pass

Popularity Range Tools & Tips:

There are a bunch of ways to solve this one. A crucial element of the problem is excluding those rows with popularity values of 0, and that can be done with a standard filter.

To calculate the range, you need the biggest and smallest values in the series. You could get this by calling sort_values() on a Series and then using .iloc[] to access the proper values. Or maybe there are some other functions that might help: max() and min(), perhaps?

def calculate_popularity_range(df):

"""Calculate the range of popularity values in the library.

Ignore all songs with popularity value of 0. (This is a placeholder value for songs with unknown popularity.)

If the library is empty, return -1.

Args:

df: a pandas.DataFrame

Returns:

an int, the range of popularity values in the library (highest popularity - lowest popularity)

Tests:

>>> calculate_popularity_range(read_songs('small.csv'))

29

>>> calculate_popularity_range(read_songs('medium.csv'))

76

>>> calculate_popularity_range(read_songs('sharry_songs.csv'))

92

"""

pass

Song Name with Most Genres Tools & Tips:

Note that the "Artist Genres" column stores strings the genres separated by commas. As a reminder, here are some important string/list processing functions in Pandas. You won’t need all of them.

str.count(substring)counts the occurrences of a substring in a stringstr.contains(substring)returnsTrueif the substring is present in the larger string in that cellstr.split(sep)splits the string into a list of substrings separated bysepstr.len()finds the length of the string (or list!) in this cell and returns it as a number

Keep in mind that you need to break ties by selecting the song that appears closer to the top of the DataFrame. When you filter rows out of a DataFrame, the order of the rows is otherwise preserved. You can use this to your advantage.

def song_name_with_most_genres(df):

"""Finds the song with the most genres in the library and returns its name.

If the library is empty, return None.

If there are multiple songs with the same number of genres, return the one that appears earlier in the library.

Args:

df: a pandas.DataFrame

Returns:

a string, the name of the song with the most genres.

Tests:

>>> song_name_with_most_genres(read_songs('small.csv'))

'Give Your Heart Away'

>>> song_name_with_most_genres(read_songs('medium.csv'))

'american dream'

>>> song_name_with_most_genres(read_songs('sharry_songs.csv'))

'...And The World Laughs With You'

"""

pass

Song Name with Most Genres Tools & Tips:

This problem deals with string processing again, so here are some important string/list processing functions in Pandas. You won’t need all of them.

str.count(substring)counts the occurrences of a substring in a stringstr.contains(substring)returnsTrueif the substring is present in the larger string in that cellstr.split(sep)splits the string into a list of substrings separated bysepstr.len()finds the length of the string (or list!) in this cell and returns it as a number

🤯 Exploding the DataFrame might be useful. Remember that this function works by expanding the DataFrame to include many “duplicate” rows, creating each one of these duplicates for each value in the list cell that’s being exploded. There are probably half a dozen ways of doing this, though, so you may have some luck perusing the Pandas documentation to find an answer. Just keep in mind that you want to match on full artist names. If I’m searching for songs by "Future", you should not return any songs by the artist "Future Islands".

def find_songs_with_artist(df, artist):

"""Selects all songs by a specific artist in the library.

Keep in mind that a song may have many artists. The song

should be included in the output if *any* of its artists

match the input. You should not modify the input DataFrame, but

you can create a copy using the .copy() method.

If the library is empty, the DataFrame returned should be empty.

Args:

df: a pandas.DataFrame

artist: a string

Returns:

a DataFrame containing only songs featuring the specified artist.

Tests:

>>> find_songs_with_artist(read_songs('medium.csv'), 'Slash').shape[0]

2

>>> find_songs_with_artist(read_songs('medium.csv'), 'Slash')['Track Name'].iloc[0]

'Starlight (feat. Myles Kennedy)'

>>> find_songs_with_artist(read_songs('medium.csv'), 'Slash')['Track Name'].iloc[1]

'Watch This'

>>> find_songs_with_artist(read_songs('sharry_songs.csv'), 'Phoebe Bridgers').shape[0]

13

>>> find_songs_with_artist(read_songs('sharry_songs.csv'), 'Phoebe Bridgers')['Track Name'].iloc[0]

'Leonard Cohen'

>>> find_songs_with_artist(read_songs('sharry_songs.csv'), 'Phoebe Bridgers')['Track Name'].iloc[1]

'Motion Sickness'

>>> find_songs_with_artist(read_songs('sharry_songs.csv'), 'Phoebe Bridgers')['Track Name'].iloc[12]

'Dominos'

"""

pass

4. Tracking Genres over Time

Spotify has a huge set of music genres that it uses to tag each song. Some of the genres they use are remarkably specific (“duluth indie”), totally obtuse (“bubblegrunge”), or just plain silly (“y’alternative” 🤠). It’s possible to get a sense of what a genre is supposed to refer to by listening to songs tagged with it, but it’s also useful to understand the genres in the context of the timeframe from which its constituent songs come. Furthermore, many genres of music evolve significantly over time—compare Future to Run-DMC or Johnny Cash to Florida Georgia Line, for example.

For this next task, help by completing the genre_frequency_over_time() function to generate a bar chart counting the number of songs matching a given genre in all years between its first appearance in the dataset to its last. The chart must be a bar chart with a title that displays the name of the genre and helpful x- and y- axes labels. (You can make it look nicer if you like—coloring the bars by release year could be a lot of fun!) By using plot(), you should be able to finish this task in just one or two additional lines of code. Keep in mind that by_year—the data you should be plotting—is a Series, so you won’t need to specify the columns to use for the x and y dimensions.

def genre_frequency_over_time(df, genre):

"""Generate a bar plot showing the number of songs of a specific genre by year.

The x-axis should be the year, and the y-axis should be the number of songs.

The first year and last years should be the first and last years that the genre

appears in the library. All intermediate years should be included, even if there are no songs

with that genre in that year. reindex() is useful for this.

Make sure to add axis labels and a title that mentions the name of the genre being plotted.

Args:

df: a pandas.DataFrame

genre: a string

Returns:

None

"""

copied = df.copy()

copied["Artist Genres"] = copied["Artist Genres"].str.split(",")

exploded = copied.explode('Artist Genres')

exploded = exploded[exploded["Artist Genres"] == genre]

exploded["Album Release Date"] = pd.to_datetime(exploded["Album Release Date"], format="mixed")

by_year = exploded.groupby(exploded["Album Release Date"].dt.year).size()

by_year = by_year.reindex(range(by_year.index.min(), by_year.index.max() + 1), fill_value=0)

## ADD CODE HERE!

by_year.plot(kind='bar', title=f'Number of {genre} songs by year', xlabel='Year', ylabel='Number of songs')

genre_frequency_over_time(read_songs('sharry_songs.csv'), 'indie pop')

This cell here runs all of the tests that are encoded into the function docstrings above. It’s a nice way of testing your output. You can add your own tests, too.

import doctest

doctest.testmod(verbose=True)

5. What Is My Kind of Song?

For the final part of this assignment, you are given a model that is designed to estimate the probability of whether some new song would fit into my library or not. You are tasked with running some cells here and thinking critically about the model as it has been described. You will not write code here, but you will have to answer a few open-ended questions and provide some justification.

The main hypothesis that drives the model we have built is that the songs that I have saved to my library might tend to fit a certain profile. Maybe my taste is, for example, happy, high-energy, acoustic-sounding music. In order to do that, it will be important to have examples of songs that are not currently among my Liked Songs. These come from an anonymous friend—let’s call her “E”—and are found in the file called other_songs.csv. By introducing songs that come from another library—from another person with a different taste for music—we might introduce some examples that have notably different musical profiles. This will give our model a notion of what music outside of my personal taste might look like so that it can learn to identify examples of it. Then, the model will be tasked with estimating the probability that a song with a certain profile comes from my library instead of E’s library.

The model is actually built using the steps outlined in the included building_a_model.ipynb. You do not have to read anything or run any code found in that notebook, but it does show you how the model is built. We can load the model using the following cell:

import pickle

with open('model.pkl', 'rb') as f:

model = pickle.load(f)

# This will print a summary of the model, which helps to confirm that

# the model was loaded correctly. For more info on some of these numbers,

# you can check out building_a_model.ipynb

model.summary()

Let’s see how well the model works on three different songs that I do really like, but that are not present in sharry_songs.csv. In each case, I have queried Spotify to get the song features for these songs and copied them manually into three individual DataFrames. Make sure to run the following cell.

dirty_laundry = pd.DataFrame({

"Track Name": "Dirty Laundry",

"Artist Name(s)" : "Cayetana",

"Acousticness": 0.0021,

"Danceability": 0.319,

"Energy": 0.892,

"Instrumentalness": 0.0528,

"Liveness": 0.187,

"Speechiness": 0.054,

"Valence": 0.749

}, index=[0])

unlimited_love = pd.DataFrame({

"Track Name": "Unlimited Love",

"Artist Name(s)": "thanks for coming",

"Acousticness": 0.574,

"Danceability": 0.851,

"Energy": 0.537,

"Instrumentalness": 0,

"Liveness": 0.302,

"Speechiness": 0.0525,

"Valence": 0.965,

"track_href": "https://api.spotify.com/v1/tracks/7I8DFjHKnjZP2ExgK5gqHK"

}, index=[0])

red_wine_supernova = pd.DataFrame({

"Track Name": "Red Wine Supernova",

"Artist Name(s)": "Chappell Roan",

"Acousticness": 0.0176,

"Danceability": 0.657,

"Duration_ms": 192721,

"Energy": 0.82,

"Instrumentalness": 0,

"Liveness": 0.0847,

"Speechiness": 0.0441,

"Valence": 0.709,

"track_href": "https://api.spotify.com/v1/tracks/7FOgcfdz9Nx5V9lCNXdBYv"

}, index=[0])

We can ask what the model thinks about the probability of each song being mine by calling the predict() method:

model.predict(dirty_laundry)

The model estimates a $93\%$ probability that this song is one of mine instead of being one of E’s. That is a fair bet in this case—I think that the lo-fi indie punk sound is one that I like much more than her. OK, so what about the next one?

model.predict(unlimited_love)

Well, “Unlimited Love” is a recent favorite song of mine, but the model seems to think it’s more likely to be one of E’s songs! (Only a $31.8\%$ of being mine, so a $68.2\%$ chance of being hers…) Curious—it doesn’t feel so far off from a lot of stuff I like, but perhaps it’s statistically more like E’s music.

How about the last one? It’s a little out of my wheelhouse, but I’ve been enjoying this Chappell Roan song recently. Let’s see what the model thinks about the chances of it being one of mine instead of one of E’s.

model.predict(red_wine_supernova)

OK, so not so surprising to the model that I would like the song—apparently it’s got a $77.5\%$ chance of being mine instead of E’s. But wait a second… E actually beat me to the punch in this case, and the song is already present in her library! We actually told the model that “Red Wine Supernova” is one of E’s songs when we were teaching it the difference between my songs and hers. This reveals one of the challenges of probabilistic models trained on lots of data: models like this are responsible for capturing large, overarching patterns, and so the model can learn patterns that contradict individual examples.

Questions

Please answer the following questions and give your best answer. You are encouraged to keep your answer to just a few sentences in each case, but you should make sure to give your answer some justification. There are several acceptable answers to each question. You are welcome to discuss these questions with a partner, but if you do, you must cite each other and also describe your discussion: Did you come to the same conclusion? Did you propose two different answers?

Q1

In order to train our model, we needed examples of songs that were “Harry Songs” and “not Harry Songs.” How did we find examples of “not Harry Songs?” What are the implications of the “not Harry Songs” that we used for the kinds of conclusions that we can draw based on this model?

Q2

Notice that when we call the predict() function from our model, we get a float representing the probability of a song being a “Harry Song.” Why might it be a good thing that the model outputs probabilities and not booleans/”yes or no” answers?

Q3

Reflect on the results of the three predictions we asked the model to make. Do they make you feel that the model is trustworthy or untrustworthy, or do you feel that you don’t have enough information? How could we get a better sense of how good the model actually is?

Q4

Conclude this assignment by making a suggestion about how we could make this model better. (“Better” is a subjective term that could have plenty of meanings in this case, so please explain in what way you think your suggestion will actually make things better!)

6. Readme & Submission

A. Readme

Complete the readme questions at the end of music_library_analysis.ipynb—no need for a separate readme file this time.

B. Submission

Submit music_library_analysis.ipynb on Gradescope.

Your code will be tested for style & runtime errors upon submission. Please note that your functions are being unit tested, and so they must work when run in isolation outside of the context of the notebook. Don’t have your functions rely on variables declared in other portions of the notebook!

Please note that the autograder does not reflect your complete grade for this assignment since your plot will be manually graded.

If you encounter any autograder-related issues, please make a private post on Ed.

7. Appendix (Data Overview)

Here is a brief overview of all of the columns included in the dataset.

| Column ID | Description |

|---|---|

| Track URI | Link to the song on Spotify |

| Track Name | The full title of the song |

| Artist URI(s) | Links to each artist performing on the song |

| Artist Name(s) | Names of each artist performing on the song |

| Album URI | Link to the album on which the song appears |

| Album Name | Title of the album on which the song appears |

| Album Artist URI(s) | Links to each artist or artists responsible for the entire album |

| Album Artist Name(s) | Names of the artist or artists responsible for the entire album |

| Album Release Date | The date when the album was released |

| Album Image URL | A URL linking to an image or cover art of the album |

| Disc Number | The disc number if the album is part of a multi-disc release |

| Track Number | The position of the track within the album’s tracklist |

| Track Duration (ms) | The duration of the track in milliseconds |

| Track Preview URL | A URL to a preview or sample of the track |

| Explicit | Indicates whether the track contains explicit content (e.g., explicit lyrics) |

| Popularity | A measure of the track’s popularity on the platform |

| ISRC | International Standard Recording Code, a unique identifier for recordings |

| Added By | Name or identifier of the user who added the track |

| Added At | Timestamp indicating when the track was added |

| Artist Genres | Genres associated with the artist(s) of the track |

| Danceability | A measure of how suitable the track is for dancing on a scale from 0-1 |

| Energy | A measure of the intensity and activity of the track (0-1) |

| Key | The key in which the track is composed (0 = C, 1 = C#, etc.) |

| Loudness | A measure of the track’s overall loudness (lower is quieter) |

| Mode | Indicates whether the track is in a major (1) or minor key (0) |

| Speechiness | A measure of the presence of spoken words in the track (0-1) |

| Acousticness | A measure of the track’s acoustic qualities (0-1) |

| Instrumentalness | A measure of the track’s instrumental qualities (0-1) |

| Liveness | A measure of the presence of a live audience in the recording (0-1) |

| Valence | A measure of the track’s positivity or happiness (0-1) |

| Tempo | The tempo or beats per minute (BPM) of the track |

| Time Signature | The time signature of the track’s musical structure |

| Album Genres | Genres associated with the album |

| Label | The record label associated with the track or album |

| Copyrights | Information regarding the copyrights associated with the track or album |